Azure Pipelines for the Open-Source Developer

Azure DevOps (AzDO) provides a variety of capabilities for developers. It is used by many large enterprise customers of Microsoft, but its CI/CD service, Azure Pipelines, in particular offers a set of amazing, free-of-charge capabilities for the Open-Source Software (OSS) enthusiast. My favorites include:

- Ten free parallel jobs for public projects. I have not compared this with other services recently, but the last time I looked it was best in class. Even larger commercial projects will seldom need that many, and it is more than sufficient for most OSS projects, including regular pull request verification and even platform matrix integration test runs.

- It is a managed service that at the same time brings the flexibility of a rich ecosystem of plugins. No more stressful and often insecure Jenkins self-hosting. The plugins are pulled from a managed and governed marketplace, which includes service integrations like SonarCloud, Artifactory or AWS, and Microsoft provides first-class GitHub and Azure integration as well.

- Some fancy features that I wish I had had back when I was still a Jenkins user, like file-path-based pipeline triggers that allow multiple pipelines on the same Git repository. It sounds tiny, but back in the day I split far too many Git repositories just to shrink the monolithic Jenkins build I was struggling with.
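To illustrate path-based triggers, here is a minimal sketch of one of two pipeline definitions living in the same repository. The folder and file names (service-a, service-b, service-a/azure-pipelines.yml) are hypothetical; each YAML file would be registered as its own pipeline in AzDO.

```yaml
# service-a/azure-pipelines.yml (hypothetical file and folder names)
# Runs only when a commit on master touches something under service-a/.
trigger:
  branches:
    include:
      - master
  paths:
    include:
      - service-a

pool:
  vmImage: "ubuntu-latest"

steps:
  # Build only the module this pipeline is responsible for
  - script: mvn -B -f service-a/pom.xml package
    displayName: 'Build service A'
```

A second file such as service-b/azure-pipelines.yml would follow the same structure with `service-b` as the path filter, so a change in one folder no longer rebuilds everything.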

For me personally, the greatest strength is the combination of Jenkins-like flexibility with a managed service experience like Travis CI, but there is something for everybody, I guess.

There are many good reasons to use Azure Pipelines, so let's get started! In this tutorial we will:

- Set up Azure DevOps.

- Build a sample Java Spring Boot project with Maven.

- Analyze the code with SonarCloud.

- Use SonarCloud and Azure Pipelines as GitHub status checks to protect the master branch.

In this run-through we will focus on managed (Microsoft-hosted) agent pools. Agents in AzDO are like Jenkins nodes: they execute the pipeline steps. Microsoft offers hosted agents (again, up to ten in parallel for free), but it is also possible to host custom agents in the cloud or on-premises.

The core service (like the Jenkins master), though, is in the cloud. As with the plugin marketplace, the beauty of AzDO is that I get the flexibility to run agents wherever I want while at the same time enjoying the availability and security of a managed service.
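Which kind of agent runs a pipeline is selected with the `pool` keyword in the YAML file. A minimal sketch, assuming a self-hosted pool named MySelfHostedPool (a hypothetical name) has already been registered in the organization:

```yaml
# Option 1: a Microsoft-hosted agent, as used throughout this tutorial
pool:
  vmImage: "ubuntu-latest"

# Option 2 (alternative, use instead of option 1): a self-hosted agent pool.
# "MySelfHostedPool" is a hypothetical pool name created beforehand
# under Organization settings -> Agent pools.
# pool:
#   name: MySelfHostedPool
```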

Set up Azure DevOps

Go to https://dev.azure.com and click Start free (with GitHub). After logging in, AzDO will ask for the name of the organization to be created and a hosting location. Inside the organization we can then create projects. In a project we have backlogs, Git repositories, wikis, dashboards, artifact repositories and, of course, pipelines.

For instance, in my organization kaizimmerm I have a project tutorials, which in turn contains several build pipelines. There are diverse options to manage user rights in these structures (it's an enterprise tool), but we will keep it simple for now.

Build a sample Java Spring Boot project with Maven

We use my Spring Boot Basics app as an example and create an azure-pipelines.yml file in the repository. The file includes two build steps. The Cache step is optional, but I highly recommend it, as we are running on hosted agents where all data is erased between runs.

Maven in particular, with its tendency to download half the internet, runs dramatically faster when the .m2/repository folder is cached, which is what we are going to do.

```yaml
# Run on a Microsoft-hosted Ubuntu agent
pool:
  vmImage: "ubuntu-latest"

variables:
  # Keep Maven's local repository inside the pipeline workspace so it can be cached
  MAVEN_CACHE_FOLDER: $(Pipeline.Workspace)/.m2/repository
  MAVEN_OPTS: '-Dmaven.repo.local=$(MAVEN_CACHE_FOLDER)'

steps:
  # Restore the Maven repository from the cache (saved again at the end of a successful job).
  # The key combines the agent OS with a hash of all pom.xml files.
  - task: Cache@2
    displayName: Cache Maven local repo
    inputs:
      key: 'maven | "$(Agent.OS)" | **/pom.xml'
      # Fallback prefixes used when there is no exact key match
      restoreKeys: |
        maven | "$(Agent.OS)"
        maven
      path: $(MAVEN_CACHE_FOLDER)
  - task: Maven@3
    displayName: 'Maven build'
    inputs:
      mavenPomFile: "pom.xml"
      mavenOptions: "-Xmx3072m"
      javaHomeOption: "JDKVersion"
      jdkVersionOption: "1.11"
      jdkArchitectureOption: "x64"
      publishJUnitResults: true
      codeCoverageToolOption: 'JaCoCo'
      testResultsFiles: "**/surefire-reports/TEST-*.xml"
      # Pass the local repository location so Maven uses the cached folder
      goals: "package $(MAVEN_OPTS)"
```

There are, of course, many more advanced things we can do in pipelines, like organizing steps into multiple jobs, defining stages or running matrix testing strategies. I might cover these in a later article.
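As a small taste of stages, jobs and matrix strategies, here is a sketch that runs the Maven build on two JDK versions in parallel. The stage and job names and the JDK_VERSION variable are hypothetical, and it assumes the project builds on both JDKs and that the hosted image provides them.

```yaml
stages:
  - stage: Build
    jobs:
      - job: Maven
        pool:
          vmImage: "ubuntu-latest"
        # Each matrix entry becomes its own job, running in parallel
        strategy:
          matrix:
            jdk8:
              JDK_VERSION: "1.8"
            jdk11:
              JDK_VERSION: "1.11"
        steps:
          - task: Maven@3
            displayName: 'Maven build'
            inputs:
              mavenPomFile: "pom.xml"
              javaHomeOption: "JDKVersion"
              # Resolved per matrix entry at runtime
              jdkVersionOption: "$(JDK_VERSION)"
              goals: "package"
```

Since every matrix entry consumes a parallel job, this is where the free parallel jobs for public projects come in handy.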

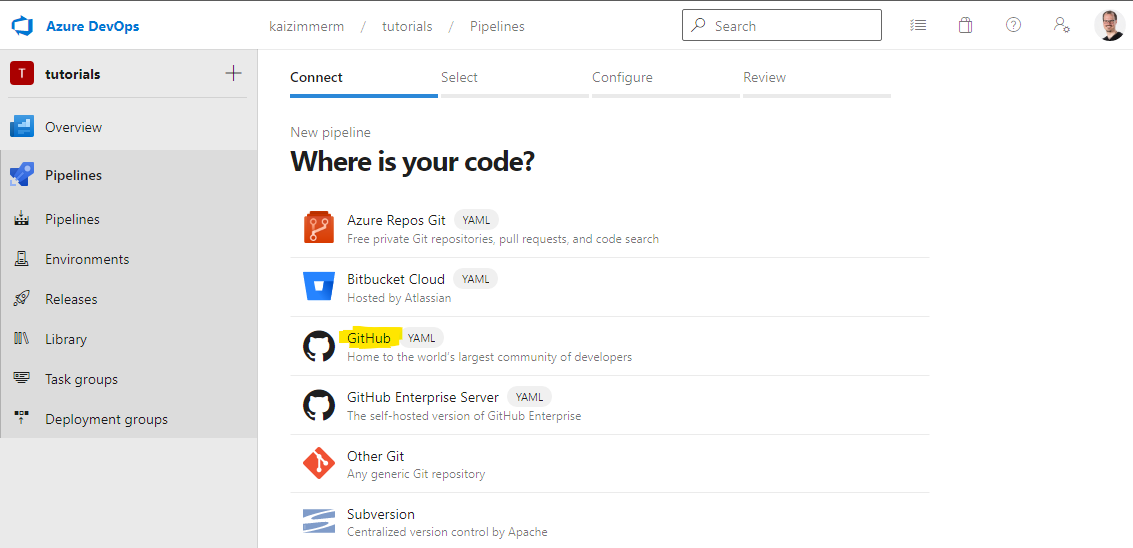

Back to our simple pipeline, though. The next step is to open Pipelines in our AzDO project, select New pipeline and then GitHub.

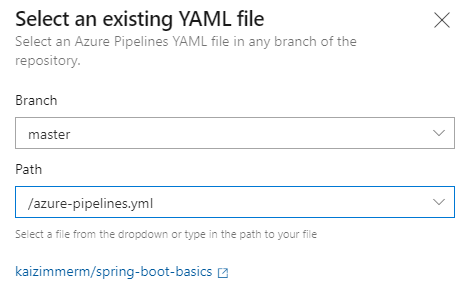

Now we can select the repository, in my case kaizimmerm/spring-boot-basics. Next, AzDO asks us to select the branch and the path where the YAML file is located.

Note: we configure the trigger for the pipeline in the YAML file itself. The branch selected here is used purely to find the file. This comes in handy when the file is still work in progress, not yet on master, and we want to test it. As we have not configured a trigger, the pipeline will react to commits on all branches by default.
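To restrict this default behavior, triggers can be added at the top of azure-pipelines.yml. A minimal sketch for a GitHub repository that builds pushes to master and validates pull requests targeting master:

```yaml
# CI trigger: build only commits pushed to master
trigger:
  branches:
    include:
      - master

# PR trigger (GitHub repositories): validate pull requests that target master
pr:
  branches:
    include:
      - master
```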

After confirmation we are good to run the pipeline.

Analyze the code with SonarCloud

While AzDO builds our code, we can also add static code analysis to the pipeline. The first thing we need to do is create a SonarCloud account. As with AzDO, it is possible to log in with GitHub.

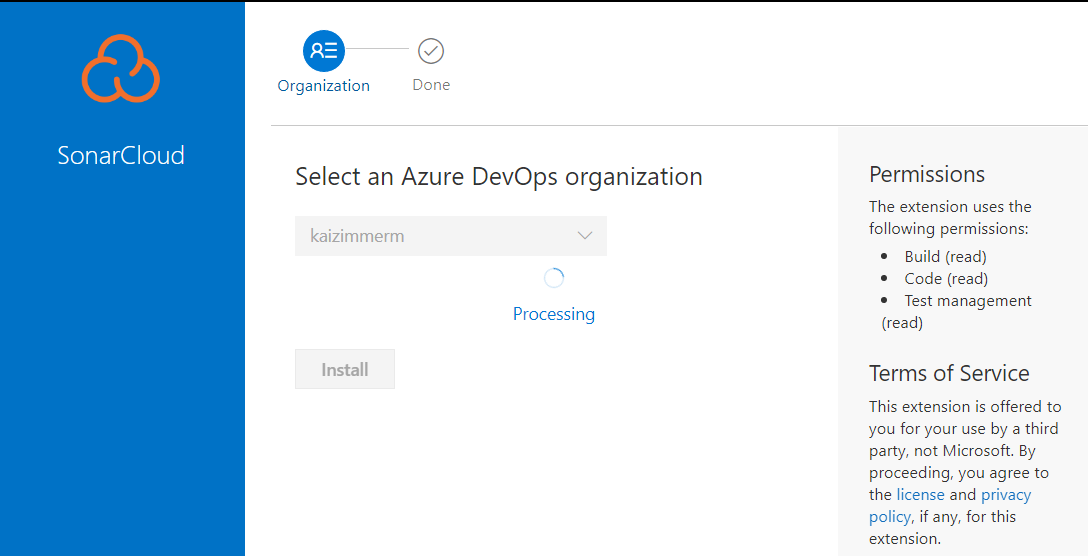

The next step is to install the SonarCloud AzDO plugin from the marketplace by simply pressing the "Get it free" button.

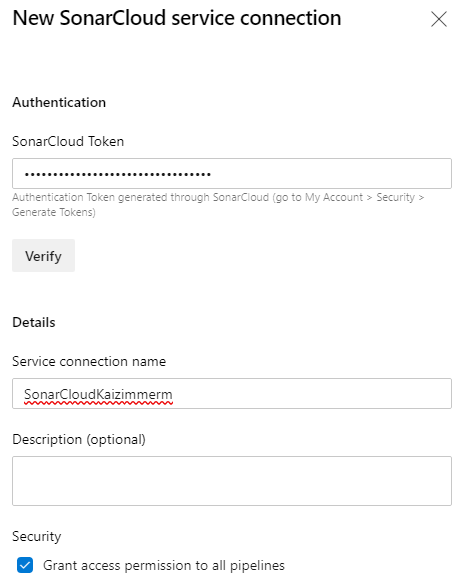

Now we can create the managed connection between AzDO and SonarCloud (no more passwords lying around somewhere on the Jenkins disk...). Inside the AzDO project, click Project settings in the lower left corner, then, under Service connections, click New service connection. Select SonarCloud and click Next. Here we need to provide a token from SonarCloud and give the connection a name.

Finally, we can add the scan to our pipeline. The SonarCloud field holds the name of the previously defined service connection, and the organization field holds the key of your organization in SonarCloud.

```yaml
pool:
  vmImage: "ubuntu-latest"

variables:
  MAVEN_CACHE_FOLDER: $(Pipeline.Workspace)/.m2/repository
  MAVEN_OPTS: '-Dmaven.repo.local=$(MAVEN_CACHE_FOLDER)'

steps:
  - task: SonarCloudPrepare@1
    displayName: 'Prepare analysis configuration'
    inputs:
      # Name of the SonarCloud service connection created above
      SonarCloud: 'SonarCloudKaizimmerm'
      # Organization key in SonarCloud
      organization: 'kaizimmerm-github'
      # "Other": the analysis is run by the Maven task below, not a standalone scanner
      scannerMode: Other
  - task: Cache@2
    displayName: Cache Maven local repo
    inputs:
      key: 'maven | "$(Agent.OS)" | **/pom.xml'
      restoreKeys: |
        maven | "$(Agent.OS)"
        maven
      path: $(MAVEN_CACHE_FOLDER)
  - task: Maven@3
    displayName: 'Maven build'
    inputs:
      mavenPomFile: "pom.xml"
      mavenOptions: "-Xmx3072m"
      javaHomeOption: "JDKVersion"
      jdkVersionOption: "1.11"
      jdkArchitectureOption: "x64"
      publishJUnitResults: true
      # Run the SonarCloud analysis as part of this Maven build
      sonarQubeRunAnalysis: true
      sqMavenPluginVersionChoice: 'latest'
      codeCoverageToolOption: 'JaCoCo'
      testResultsFiles: "**/surefire-reports/TEST-*.xml"
      goals: "package $(MAVEN_OPTS)"
  - task: SonarCloudPublish@1
    displayName: 'Publish results on build summary'
    inputs:
      # Wait up to 300 seconds for SonarCloud to finish processing the analysis
      pollingTimeoutSec: '300'
```

Pull request verification

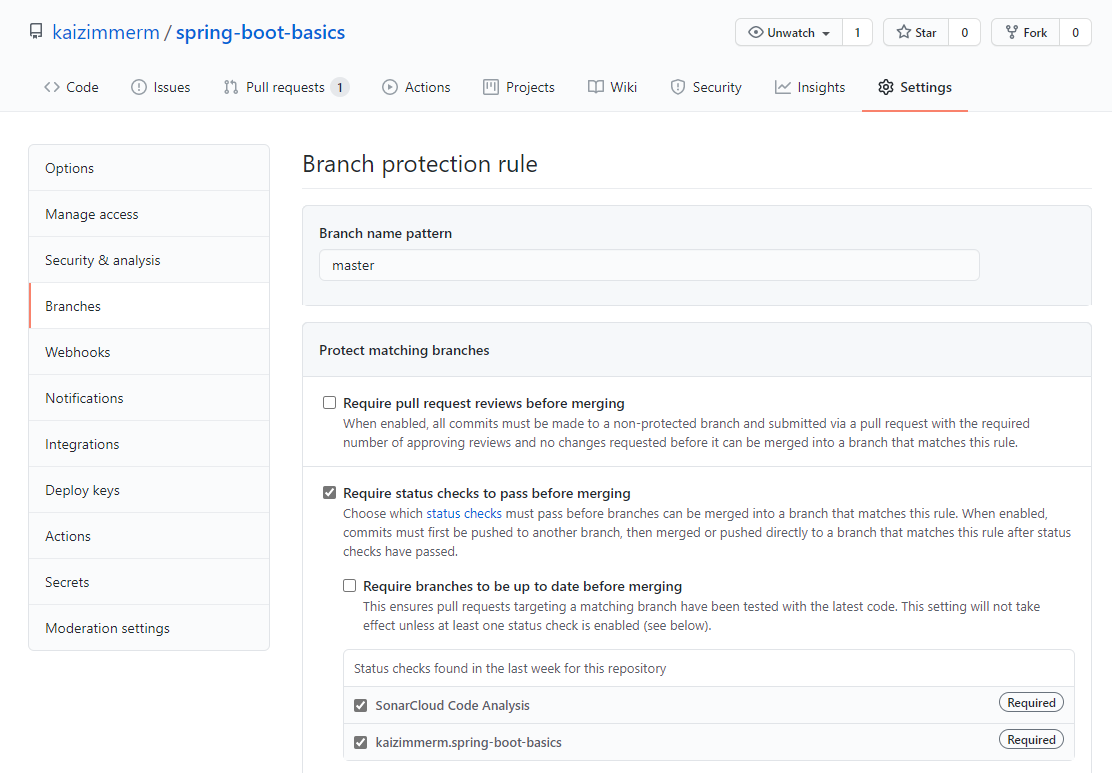

In GitHub we can configure branch protection in the repository under Settings → Branches → Add rule. We configure this for the master branch and enable the status checks for SonarCloud and for the build pipeline.

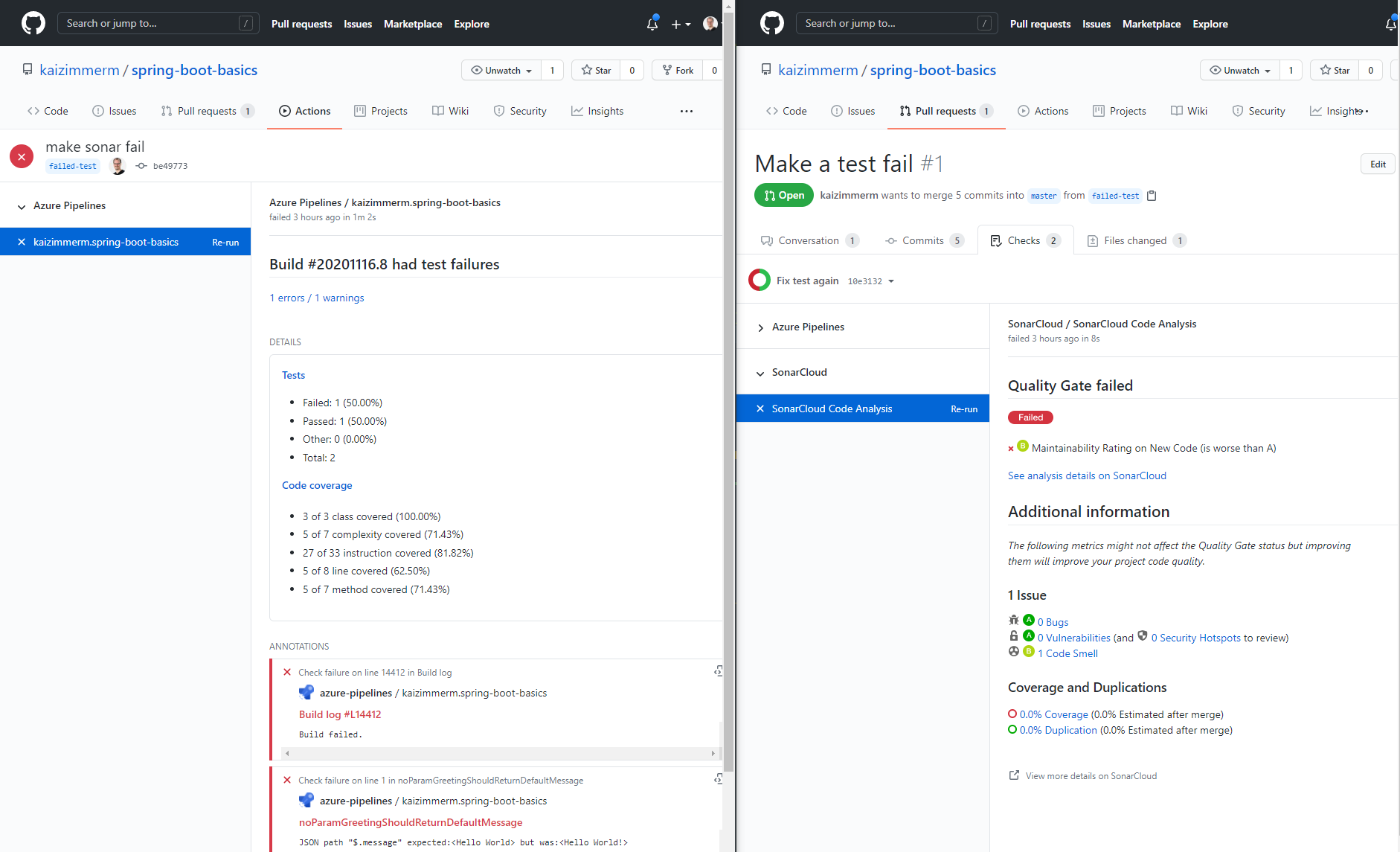

As shown in the screenshot, a failure of either the SonarCloud quality gate or the build itself will mark the pull request accordingly.

Next steps

This was a first introduction to the Azure Pipelines universe. There are many capabilities I could not address here, but I will cover more topics in the future.

Until then, the YAML schema reference in the Azure Pipelines documentation is an informative read for getting an overview of what else is possible.

I hope it has become clear what I love about Azure DevOps: an enterprise feature set in a hassle-free managed service with (almost) Jenkins-like flexibility that is free for open-source projects. It can't get much better than that.

Happy DevOps!